My Projects

You may click on a project's title to see its github repository.

A Car Buying Guide (The Data Incubator Capstone Project)

It is always a huge hassle to decide when looking for a used car. There are so many options to consider such as owner reviews, common technical issues, and local price of a car. Moreover, potential buyers might end up comparing it with other years or conditions of the same car as well as its price in neighbors for a better informed decision.

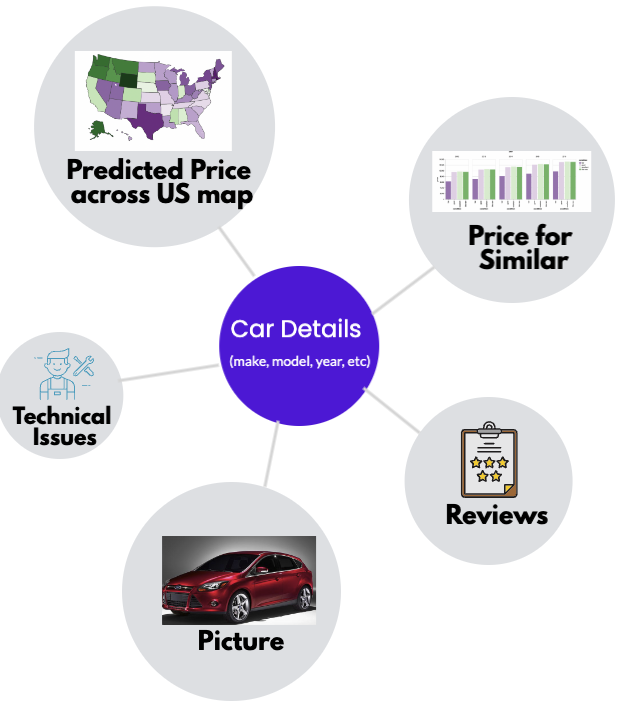

This project aims to guide people in their search for a used car. It brings the following for its users:

- a picture of the car,

- the summary of owner reviews,

- the most 6 common technical issues reported by the owners,

- a bar-chart that compares the predicted average price for different year and condition combinations of the same car,

- and finally plots the predicted prices across the states on the U.S. map.

Data:

- Kaggle: The data is obtained from kaggle.com. It is apprx 1.4 GB CSV and includes the criagslist ads of apprx 500,000 vehicles. It consists of listing price, manufacturer, model, year, odometer, condition, transmission, region, state, VIN number, ad id, cylinders, fuel, drive type, lat, long, and description.

- NewCarTestDrive and Google: For each car, its MSRP value is scraped from NewCarTestDrive.com if it is available there, otherwise from Google.com. (Including the MSRP values, improved the MAE of the best performing model by 68%, decreasing it from 1440 to 453.)

Model:

Among many estimators, a GroupbyEstimator that uses different Random Forest Regressors for each manufacturer group is adopted. It yields an R2 value of %98.75 (MAE: 453), while it is 92% (MAE: 941) on the test set. 3 different predictors (Linear Regression, Ridge Regression, and Random Forest Regressor) are used in 3 three different settings (A GroupbyEstiamtor for 'state' and 'manufacturer each, and one model for the entire dataset. The best performing model out of the 9 is adopted.

Day Trading with Deep Learning

Candlestick chart is the most common way to observe the historical prices of a financial asset. Unlike line charts, by looking at a candlestick, one can identify an asset’s opening and closing prices, highs and lows, and overall range for a specific time frame. Despite the Efficient Market Hypothesis, this project uses the history of candle stick formation of the daily prices of a stock to predict whether it is a good time for a day-trade.

It would be unfair to expect shocking results from such a simple analysis, however, given that the data is freely available, this is a nice project to exersice reading big data from directory while training a deep network model. Moreover, it is possible to incorporate GDELT data to this model for a more sophisticated version.

Data:

- NASDAQ: The raw data is obtained from nasdaq.com. It consists of daily high, low, open, and close prices of the 220 stocks between 2015 August - 2020 July, which are listed in SP500.

- For every 22 day window, an image of its corresponding candle stick chart is created along with a bollinger bands and moving average on it. If a day trade in the 23rd day results in a profit greater than 0.5%, it is labeled as 1, if it yields a loss more than -0.5% labeled as -1, and 0 otherwise.

Model:

Utilized Keras to train a Convolutional Neural Network (CNN) with apprx. 4 GB image data in batches. Since it is not possilbe to load all the data to the memory, the model is trained by reading from the directory in batches. As expected, a CNN model is uninformative when fed in with candle stick images since they do not contain systematic local patterns that can be used for prediction.

Natural Language Processing with Disaster Tweets

This particular challenge is perfect for data scientists looking to get started with Natural Language Processing. This project builds multiple machine learning models that predict which Tweets are about real disasters and which ones aren’t, using different cleaning processes, vectorization methods, and predictors. The competition description is summarized below. You may visit kaggle for more details about it.

Twitter has become an important communication channel in times of emergency. The ubiquitousness of smartphones enables people to announce an emergency they’re observing in real-time. Because of this, more agencies are interested in programatically monitoring Twitter (i.e. disaster relief organizations and news agencies). But, it’s not always clear whether a person’s words are actually announcing a disaster.

Data:

- Kaggle: 10,000 tweets that were hand classified

Model:

There are 12 different models that use Stemming or Lemmatizing during data cleaning process; Count, N-gram, or TfIdf vectorization methods; and finally Gradient Boost or Random Forest Classifier as a predictor. Tuning the hyper-parameters and comparing the model, I find that the best model is the one that uses Porter stemming in data cleaning, Count vectorization, and a Random Forest Classifier as a predcitor, which yields 79.3% accuracy, 85.8% precision and 62% recall.

Creating a Python Package

As a part of the Udacity's AWS ML Foundations Course's in class exercises, I created a Python package that has General, Gaussian, and Binomial Distribution Classes and uses object oriented programming to create a Python package.

Snake Game:

Bring back the childhood memories!.. This project programs our childhood's game, Snake, in Python using PyGame package.

My Research

A Dynamic Model of Mixed Duopolistic Competition: Open Source vs. Proprietary Innovation

We model the competition between a proprietary firm and an open source rival, by incorporating the nature of the GPL, investment opportunities by the proprietary firm, user-developers who can invest in the open source development, and a ladder type technology. We use a two-period dynamic mixed duopoly model, in which a profit-maximizing proprietary firm competes with a rival, the open source firm, which prices the product at zero, with the quality levels determining their relative positions over time. We analyze how the existence of open source firm affects the investment and the pricing behavior of the proprietary firm. We also study the welfare implications of the existence of the open source rival. We find that, under some conditions, the existence of an open source rival may decrease the total welfare.

High Speed TIQ Flash ADC Designing Tool with Supervised Learning

The design of TIQ Flash ADC requires extensive amount of data. This work introduces a new tool that decreases the amount of data needs to be created. The proposed tool utilizes supervised learning to approximate required data, and returns a set of comparators for ADC design. It is observed that the approximation error is a few hundreds of μV for resulting set. Moreover, it allows the designer to predict the power, speed, and precision values of a potential design.

Optimal Disclosure Policies Under Endogenous Valuation Distributions

In this paper, I analyze the best information disclosure policy that an auctioneer can adopt according to different performance measures, namely players' payoffs, prize allocation efficiency, and aggregate effort. The significant feature of the analysis is that players have the ability to choose the distribution from which their own types are drawn. Using a two-player all-pay auction with two types setting, I show that the optimal disclosure policy depends on the ratio of the value of winning for a low type to the value of winning for a high type.

Optimal Value Distributions in All-Pay Auctions

This paper analayzes a two-player two-stage asymmetric all-pay auction where the players choose a distribution over a common valuation set in the first stage, which then determines their valuation of winning in the auction stage. After observing their opponent's choice of distribution and their realized valuations, players play an all-pay auction in the second stage of the game. The equilibrium outcome of the game is characterized and in this outcome we show that one player assigns probability 1 to the highest type whereas the other player shares his probability equally between the highest and the lowest type in the support.